Audiovisual Sampling: Recording and Sequencing

Thus far in the project, the sonic aesthetic has consisted largely of ambient, atmospheric or drone material, with rhythm playing an ornamental role rather than a foundational one. This direction reflects my main musical sensibilities as an ambient/long-form producer. It has also allowed timbral and temporal control of sound through movement interaction to take centre stage, exploring the performance affordances that the added medium of movement provides. However, as the practical element of the project enters its later stages, I have become increasingly curious about how I might create dynamism and contrast by introducing rhythmic elements.

Following Louise Harris’s proposition that egalitarian engagement of the visual and sonic mediums is a prerequisite for audiovisuality, I have been particularly mindful of how rhythmic devices might be deployed across both forms, ultimately exploring the audiovisual tenet of synchresis (Dunphy 2020, Harris 2021). My most exciting idea for pursuing these heuristics is an audiovisual sampler that records video caches to be recalled by a sequencer. The same sequencer output can also be routed to a sound sequencer in the modular, resulting in synchronised sequencing of auditory and visual material.

You may notice that I have chosen not to tie the visual material directly to the sonic by recording a video cache with accompanying audio. This is intentional. Recording the samples separately (the visuals in TouchDesigner and the sound in a hardware sampler) allows:

- Alternative visual/sonic combinations to be explored in real time
- Independent processing of samples in the modular (filtering, effects, etc.)
- All audio to stay in the modular and all visuals in TouchDesigner, minimising unnecessary complications and CPU load

Over the past few days, I have managed to build a stable and effective version of this system in TouchDesigner. It has been far more successful than my previous attempts at a visual sampler, largely because it uses ‘Cache’ TOPs to hold video buffers in memory rather than writing video files to disk and reading them back. Prior experiments have shown that reading video from disk on command is not one of TouchDesigner’s strengths.
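The advantage of the Cache TOP approach is that frames are kept in a fixed-size buffer in memory and addressed by index, so recalling a frame never has to wait on the disk. The plain-Python sketch below illustrates that data structure only. It is not TouchDesigner's API. The `FrameCache` class is hypothetical, and its indexing convention (0 is the most recent frame, negative indices step back in time) reflects my understanding of how the Cache TOP's output index behaves. That convention is worth checking against the TouchDesigner documentation.

```python
from collections import deque


class FrameCache:
    """Hypothetical in-memory frame buffer, loosely modelled on a Cache TOP.

    Index 0 is the most recent frame; negative indices step back in time.
    """

    def __init__(self, size):
        # deque with maxlen: the oldest frames drop off automatically when full
        self.frames = deque(maxlen=size)

    def write(self, frame):
        self.frames.append(frame)

    def read(self, index):
        # index 0 -> newest frame, -1 -> the one before it, and so on
        if index > 0 or -index >= len(self.frames):
            raise IndexError("index outside cached range")
        return self.frames[len(self.frames) - 1 + index]


cache = FrameCache(size=3)
for frame in ["f0", "f1", "f2", "f3"]:
    cache.write(frame)  # "f0" is pushed out once "f3" arrives
print(cache.read(0), cache.read(-2))  # f3 f1
```

Because the buffer size is fixed in advance, memory use stays predictable during performance. This is a reasonable trade-off when clips are short rhythmic fragments.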

One channel of the visual sampler

Pictured above is one channel of the sampler, which includes ‘Record’ and ‘Play’ operations. Separate MIDI notes from the sequencer are routed to drive each operation. A high signal at the ‘Record’ input starts cache recording along with a timer whose output determines the length of the sample. A low signal stops both the recording and the timer.
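The ‘Record’ logic can be sketched as a small state machine. This is an illustrative Python model, not the TouchDesigner network itself. `RecordChannel`, its methods, and the choice to clear the previous sample when a new recording starts are all assumptions made for the example. Time is counted in video frames, and each call to `tick` stands in for one frame.

```python
class RecordChannel:
    """Hypothetical model of the 'Record' side of one sampler channel.

    While the gate is high, each incoming frame is cached and a frame-count
    timer runs. When the gate goes low, both stop, and the timer value is
    kept as the sample length.
    """

    def __init__(self):
        self.recording = False
        self.frames = []
        self.length = 0  # timer output: sample length in frames

    def set_gate(self, high):
        if high and not self.recording:
            # Rising edge: assume a new recording replaces the old sample
            self.frames = []
            self.length = 0
        self.recording = high

    def tick(self, frame):
        # Called once per video frame
        if self.recording:
            self.frames.append(frame)
            self.length += 1


channel = RecordChannel()
incoming = ["a", "b", "c", "d", "e"]
gate = [False, True, True, True, False]
for frame, high in zip(incoming, gate):
    channel.set_gate(high)
    channel.tick(frame)
print(channel.length, channel.frames)  # 3 ['b', 'c', 'd']
```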

On the ‘Play’ side, a high signal starts a timer that directs the ‘Cache’ TOP to scan through its cache in reverse, reproducing the recorded clip. The ‘Play’ signal also controls the opacity of the output ‘Level’ TOP. Opacity is set to ‘1’ while the signal is high and returns to ‘0’ when it goes low, which ensures darkness between clip iterations.
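The timer's job on the ‘Play’ side is to turn elapsed time into a position in the cache. The sketch below assumes Cache TOP-style indexing (0 is the newest frame, negative values are older). Under that assumption, a clip of N frames starts at index -(N - 1) and counts up to 0, so playback runs through the indices in reverse order. The function names are hypothetical, and time is measured in frames. The sketch also models the opacity gating that keeps the output black between clip iterations.

```python
def playback_index(elapsed_frames, clip_length):
    """Map frames elapsed since the 'Play' gate went high to a cache index.

    Assumes 0 = newest cached frame and negative = older, so the clip starts
    at the oldest recorded frame, -(clip_length - 1), and ends at 0.
    Returns None once the clip has finished.
    """
    if elapsed_frames >= clip_length:
        return None
    return -(clip_length - 1) + elapsed_frames


def output_opacity(play_gate_high):
    # The output Level stage follows the 'Play' gate:
    # fully visible while high, black while low.
    return 1.0 if play_gate_high else 0.0


clip_length = 4
print([playback_index(t, clip_length) for t in range(5)])  # [-3, -2, -1, 0, None]
print(output_opacity(True), output_opacity(False))  # 1.0 0.0
```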

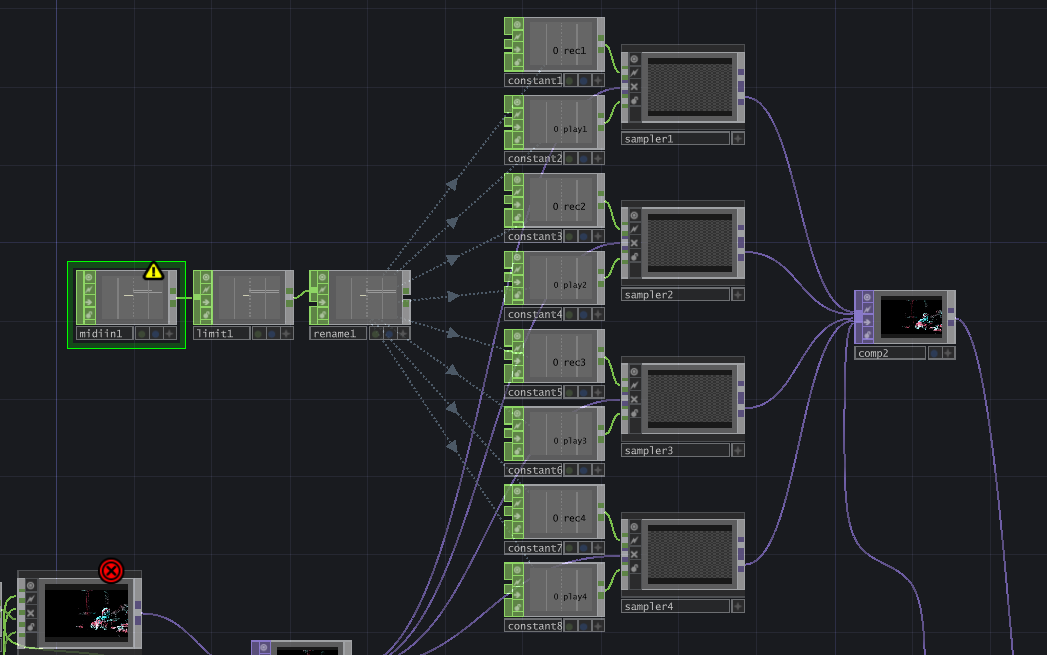

Four sampler components, with MIDI routed in and their video outputs combined in a Composite TOP.
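The opacity gating matters once the four channels are combined. The Composite TOP offers several blend operations, and the text does not say which one is used here. The sketch below therefore assumes a clamped add, with each frame modelled as a short list of brightness values. `composite_add` is a hypothetical name. Under this assumption, an idle channel that has been gated to black contributes nothing to the mix, so only the clips currently playing are visible.

```python
def composite_add(layers):
    """Combine per-channel frames pixel by pixel with a clamped add.

    Each layer is a list of brightness values in [0, 1]. A channel whose
    opacity gate is low is represented by an all-zero (black) frame.
    """
    width = len(layers[0])
    return [min(1.0, sum(layer[i] for layer in layers)) for i in range(width)]


black = [0.0, 0.0, 0.0]
layers = [
    [0.25, 0.75, 0.0],  # channel 1: playing
    black,              # channel 2: idle
    [0.5, 0.5, 0.5],    # channel 3: playing
    black,              # channel 4: idle
]
print(composite_add(layers))  # [0.75, 1.0, 0.5]
```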

I use ‘high’ and ‘low’ signal terminology here to highlight that the system is optimised for gates rather than triggers. The samplers are performed by a hardware BeatStep Pro sequencer over MIDI, and the same sequencer is also sequencing sound. Gate inputs keep clip length flexible, which ensures that a longer instance of a sound is matched by a longer instance of the video clip.
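The benefit of gates over triggers becomes clear when MIDI note events are converted into gate intervals. In this hypothetical Python sketch, events are `(time, kind, note)` tuples, and the note numbers assigned to ‘Record’ and ‘Play’ are invented for illustration. A real MIDI stream would arrive through a MIDI input rather than a list. Some devices also send a note-on with velocity 0 in place of a note-off, a case this sketch does not handle.

```python
def notes_to_gates(events):
    """Turn timed MIDI note events into (note, start, end) gate intervals.

    events: list of (time_seconds, kind, note), where kind is "on" or "off".
    A note-on raises the gate for that note and the matching note-off lowers
    it, so the gate length (and hence the clip length) follows the note length.
    """
    open_notes = {}
    gates = []
    # Sorting puts "off" before "on" at equal times, so retriggers close first
    for time, kind, note in sorted(events):
        if kind == "on":
            open_notes[note] = time
        elif kind == "off" and note in open_notes:
            gates.append((note, open_notes.pop(note), time))
    return gates


RECORD_NOTE, PLAY_NOTE = 36, 37  # hypothetical note assignments for one channel
events = [
    (0.0, "on", RECORD_NOTE), (0.5, "off", RECORD_NOTE),
    (1.0, "on", PLAY_NOTE), (1.25, "off", PLAY_NOTE),
    (2.0, "on", PLAY_NOTE), (3.0, "off", PLAY_NOTE),
]
for note, start, end in notes_to_gates(events):
    print(note, end - start)  # prints: 36 0.5, then 37 0.25, then 37 1.0
```

With a trigger, only the start time would be available. Both ‘Play’ notes would then produce the same fixed-length clip, whereas here the second note holds the clip four times longer than the first.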

Above is a demonstration of the sampler in operation, using drum sounds unrelated to the video clips. I do note that an association forms between the stimuli simply through their relation in time. This is interesting as a phenomenon and informative as a system feature.

However, the larger idea for the sampler in performance is that body visualisation clips and segments of audio are recorded and recalled together. With recordings taken during drone explorations, the slow-moving, gradual nature of ambient performance can then be reworked into a synchronous rhythmic section of higher intensity. Recalling body visualisations is captivating and unique, even in technical demonstrations, and it opens up possibilities to explore themes of teleportation, instances, energy transfer and so on. Introducing additional bodies to interact with visually is a large step, both conceptually and technically. These ideas are explored in the following demonstration. The first third shows timbral control, the middle third is a somewhat clunky exhibition of sample treatment, and the last third combines the complete sampler with live control.

All in all, the sampling system has provided an elegant solution to the challenge of audiovisually fused rhythm, while exposing another unique technique made possible by TouchDesigner and music hardware. This small system combines body visualisation with movement interaction, and long-form timbral control with predetermined rhythmic sequences, widening the range of audiovisual performance possible with the system. It also uncovers a new layer of the system: one that records and reinterprets the primary layer of timbral and temporal control of sonic atmospheres. I look forward to exploring more nuanced and creative ways of operating the sampler. These could include automated recording cycles that continuously capture new material, or adding audio-reactivity to visual effects further down the line.