Touchdesigner: The Key to an Integrated System

Unfortunately, my plans for this week have been stymied by an intense flu, and I have been unable to conduct an experiment in the way that I had hoped. However, in this downtime, my mind has turned to some of the significant elements of the system that have received little attention in the journal thus far. Most significant among them is the role that Derivative’s Touchdesigner plays as the main software within the system. In this post, I will back up a little in the project to highlight how important Touchdesigner is to its success and which features enable it to be the main hub of the system.

The software itself is a node-based creative coding environment geared towards the real-time creation of visual material, with significant capabilities for interaction, generative techniques and data translation. Touchdesigner provides six libraries of nodes designed for the processing of data (CHOPs, DATs), the manipulation of images/video (TOPs), 3D operations (SOPs), materials and shaders (MATs) and higher order operations (COMPs). By combining these nodes (or ‘operators’), incredible potential for advanced interactive artworks is uncovered. Operators can analyse audio, create 3D environments, manipulate video and translate almost any medium into data. Additionally, CHOPs may import or export OSC, MIDI, DMX and a range of other protocols. It’s these features that have enabled me to interpret the Kinect’s skeleton tracking, communicate with Wekinator via OSC and then output MIDI to the modular synthesiser. More specifically, a number of features have influenced the way this system has come together and defined its capabilities.

Native Kinect Support

To begin with, the native support that Touchdesigner features for Microsoft’s Kinect products is essential to the design of this system. In fact, it is this feature that provided the impetus for me to explore movement interaction in the first place, initially with a Kinect v1 and VCV Rack. Touchdesigner’s library of CHOPs includes dedicated Kinect operators, which when combined with a Kinect v2 supply skeleton tracking for 25 body points across the x, y and z axes, as well as face tracking, bone data, colour space positions and depth space positions. Additionally, the ‘Kinect’ TOP provides output from the device’s cameras: colour, depth and infrared. Without Touchdesigner, these data streams are substantially harder to reach and interpret. The ability to access this data so conveniently in software with so much creative potential is an element of this project that cannot be overstated. Within the system, the Kinect CHOP is the first element of the digital processing network, after which the data passes through a series of scaling processes in other CHOPs. The Kinect TOP also supplies the basis for any body visualisation, with 2D silhouettes or 3D point clouds offering effective visualisations to build upon.

The Kinect CHOP leading into a series of ‘Select’ and ‘Math’ CHOPs that calculate joint positions relative to the hips, as recommended by Tim Murray-Browne.
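The hip-relative calculation is simple arithmetic, and it may help to see it outside the node network. What follows is only a minimal sketch in plain Python, not the CHOP network itself: the joint names, the `relative_to_hips` and `flatten` helpers and the sample coordinates are all hypothetical, chosen for illustration.

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


def relative_to_hips(joints: Dict[str, Vec3], hip_name: str = "hip_centre") -> Dict[str, Vec3]:
    """Subtract the hip position from every joint so the hips become the origin."""
    hx, hy, hz = joints[hip_name]
    return {name: (x - hx, y - hy, z - hz) for name, (x, y, z) in joints.items()}


def flatten(joints: Dict[str, Vec3]) -> List[float]:
    """Lay the joints out as one flat list: x, y, z for each joint in turn."""
    return [component for position in joints.values() for component in position]


# Hypothetical frame with three joints (a Kinect v2 skeleton would have 25).
frame = {
    "hip_centre": (0.1, 0.9, 2.0),
    "hand_left": (-0.3, 1.2, 1.8),
    "head": (0.1, 1.6, 2.1),
}
relative = relative_to_hips(frame)
print(flatten(relative))
```

With 25 joints, the flattened list holds 75 values. The appeal of the relative form is that the same gesture produces the same numbers wherever the performer stands in the room.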

OSC and MIDI Support

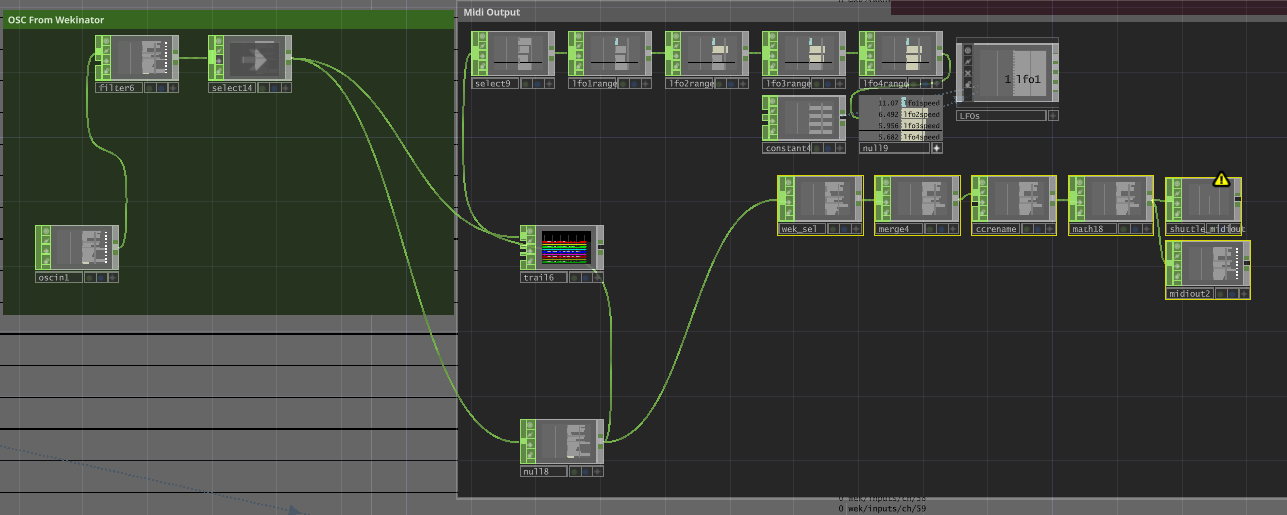

This project relies upon effective communication between various software and hardware products, requiring reliable processing of a range of protocols. Touchdesigner’s stability in these processes is what has allowed the system to operate in real time, as data is passed between Wekinator, the synthesiser, and then back into the system as audio. After body tracking data is scaled and calculated as either relative or absolute, it is merged into a corpus of data to be exported to Wekinator via OSC. This is achieved with a series of DATs that craft the OSC message Wekinator is expecting, which is then sent by an ‘OSC Out’ CHOP over the network to Wekinator. Wekinator receives this corpus of data and processes it to create a smaller set of ‘latent’ values generated by machine learning models, which may then be brought back into Touchdesigner as OSC. With Wekinator’s output now in hand, Touchdesigner is again essential in converting these values to MIDI. This process also unveils new possibilities across another layer of mapping, as latent values may be converted directly, passed through onset detection to create triggers, or even assigned to control temporal measures such as LFO rates. By and large, however, I have translated them directly to MIDI, which may be sent to the modular synthesiser’s ‘Shuttle Control’ MIDI to CV converter module via a ‘MIDI Out’ CHOP. With the latent values fed into the synthesiser, movement-interactive music is made and the project proceeds. Touchdesigner’s work is not yet finished, however: the audio-reactive and visual elements are still to be determined!

OSC message crafting through a series of DATs, adding the required ‘/wek/inputs’ address before a string of 75 floats.

OSC returning from Wekinator containing 16 latent values before being scaled to the MIDI range and exported. Note the ‘LFOs’ COMP, which contains several LFOs that may be inserted into the mapping chain to allow temporal parameter control.
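The two simplest mappings mentioned above, direct conversion and trigger creation, can be sketched in a few lines of plain Python. This is an illustration under stated assumptions rather than the network pictured: I assume the latent values arrive in the range 0 to 1 (this depends on how the Wekinator models are configured), the `to_midi` and `Trigger` names are hypothetical, and the trigger is a bare threshold crossing rather than a full onset detector.

```python
def to_midi(value: float, lo: float = 0.0, hi: float = 1.0) -> int:
    """Scale a value from the range lo..hi to a 7-bit MIDI value (0-127), clamping overshoot."""
    if hi == lo:
        raise ValueError("lo and hi must differ")
    normalised = (value - lo) / (hi - lo)
    clamped = min(1.0, max(0.0, normalised))
    return round(clamped * 127)


class Trigger:
    """Fire once each time the input rises through the threshold."""

    def __init__(self, threshold: float = 0.5) -> None:
        self.threshold = threshold
        self._above = False

    def update(self, value: float) -> bool:
        is_above = value >= self.threshold
        fired = is_above and not self._above
        self._above = is_above
        return fired


# Hypothetical latent values standing in for one frame of Wekinator output.
latents = [0.0, 0.25, 1.0, 1.3]
print([to_midi(v) for v in latents])

trigger = Trigger(threshold=0.5)
print([trigger.update(v) for v in [0.1, 0.6, 0.7, 0.2, 0.9]])
```

Clamping matters here because a regression model can overshoot its training range, and a value outside 0 to 127 is not a valid MIDI data byte.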

Audio Reactivity and Visual Generation

As sound is fed from the synthesiser back into my audio interface, it is routed into Touchdesigner once more via the ‘Audio Device In’ CHOP to become a defining factor of the system’s visual arm. The software includes a slew of operators for audio analysis, including spectral analysis, beat detection and envelope following, as well as audio effects such as EQ and compression. These operators can be combined to scale an audio signal, compress dynamics, analyse the spectrum and visualise frequencies as they arise in a myriad of ways. In my audiovisual performances, these techniques have been the building blocks of synchronised visuals. In this project, cohesive performance reaches new degrees when combined with the visual data extracted from the Kinect’s cameras, allowing the body to contribute foundational data to both the sonic and visual elements. Final products might be the body depicted by a particle system that reacts to transients in the music while changing colour depending on the sound’s spectral content, or a silhouette of the body distorting and interacting with a scrolling spectral map. This is finally the heart of what Touchdesigner was designed to achieve, and the possibilities are virtually endless.

A test export during body visualisation work using the Kinect’s depth camera and audio spectrum analysis to visualise kicks and snares.
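Of the analysis techniques listed above, envelope following is the easiest to show in isolation. The sketch below is a generic one-pole envelope follower in plain Python, not a reproduction of any Touchdesigner operator; the function name, the attack and release times and the test signal are all assumptions chosen for illustration.

```python
import math
from typing import Iterable, List


def envelope(samples: Iterable[float], sample_rate: int = 44100,
             attack_ms: float = 5.0, release_ms: float = 100.0) -> List[float]:
    """Track the loudness contour of a signal with separate attack and release smoothing."""
    attack = math.exp(-1.0 / (sample_rate * attack_ms / 1000.0))
    release = math.exp(-1.0 / (sample_rate * release_ms / 1000.0))
    level = 0.0
    out = []
    for sample in samples:
        rectified = abs(sample)
        # Rise quickly when the signal exceeds the current level, fall slowly otherwise.
        coeff = attack if rectified > level else release
        level = coeff * level + (1.0 - coeff) * rectified
        out.append(level)
    return out


# A hypothetical burst of full-scale signal followed by silence, like a single drum hit.
burst = [1.0] * 1000 + [0.0] * 1000
env = envelope(burst)
print(round(env[999], 3), round(env[-1], 3))
```

The resulting contour rises sharply on the hit and decays smoothly afterwards, which is the kind of value that can drive particle size or brightness without visible flicker.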

I hope this has provided a little more insight into the workings of the system and the role Touchdesigner plays as the intermediary between its various elements. In writing this, I have ruminated on the extent to which my education in Touchdesigner has influenced my ambitions for integrating mediums into a coherent performative form, or whether my taking to the software has stemmed from these goals… In either case, the tool is an essential part of my kit and has played a significant role in demonstrating possibilities that combine the physical world, the sonic realm and digital visualisation into interactive experience. I can’t help but feel that my relationship with it is demonstrative of the broader dialectic between the arts and technology as a whole.