Parallel Developments

This week has seen a range of developments in the system across its sonic, visual and audiovisual elements. As every element of the project has now reached a functional point, advancing them in parallel feels like the most comfortable and cohesive way to proceed. This workflow is also proving helpful in conceiving the system holistically, as the elements become increasingly intertwined. Sonic devices have been chosen, effective MIDI processes discovered and Wekinator recording techniques explored, while the performative potential has been expanded and the visual accompaniment developed.

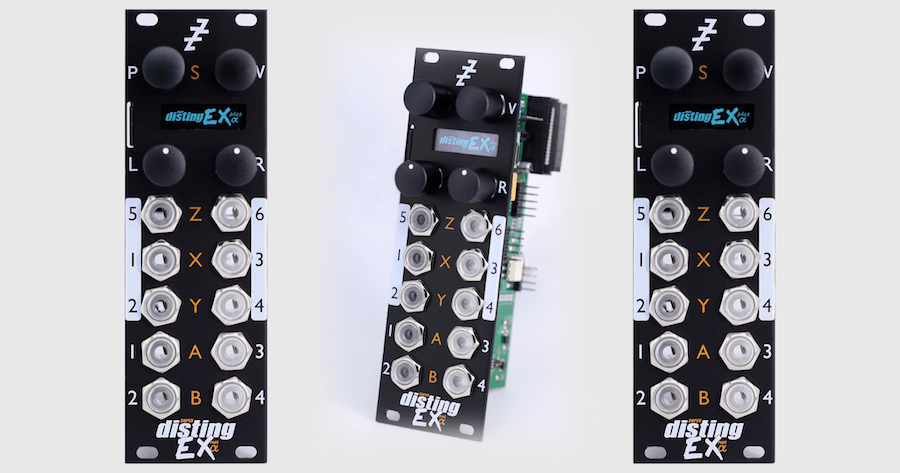

Musically, the modular patch development has largely been concerned with deciding on the most effective use of the Expert Sleepers Super Disting EX module. This module is unique in its fully digital, multi-purpose design, which hosts utility, audio-effect and audio-generator applications, or 'algorithms'. Its digital architecture also accepts full MIDI input, which opens up parameter control beyond the MIDI-to-CV conversion in the Shuttle Control module. Early experimentation this week investigated the Dream Machine algorithm, which creates drone chords in Just Intonation in the style of the minimalist composer La Monte Young. While the drone's timbral variation and the amplitudes of its harmonics made effective modulation targets, the algorithm was not suited to tonal variation and did not offer enough range to support a satisfying interactive experience. This led me back to the Resonator algorithm to fulfil a similar purpose: a harmonic device capable of a wide range of timbres with reliably consonant tonal variation. Though I have used this algorithm to produce melodic plucks in previous patches, I have since discovered its potential as a harmonic device. When the resonant filters are tuned to a chord, e.g. Cmaj7, the spectral content of the input audio excites particular regions of that chord, effectively producing different inversions up and down the harmonic space. In light of this, I could elegantly 'reveal' different harmonic sections of the emergent chord by modulating a filter on the audio before it reaches the Resonator, namely the digital filter within my Bitbox sampler module. Combined with movement-guided timbral modulation of the Position, Decay and Brightness parameters over MIDI, this made a usefully wide range of timbres possible. Additionally, a clocked 64/1 LFO applied to the Disting's Chord input introduced structural chord changes.

The ‘Disting EX’ Module. Image from Expert Sleepers.
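The 'revealing' effect of filtering the Resonator's input can be illustrated numerically. This is a simplified sketch under stated assumptions: it treats the chord-tuned Resonator as ringing at every Cmaj7 tone across several octaves, and it substitutes an idealised band-pass curve for the Bitbox filter. The real filter type and the Disting's internal voicing are not modelled, and all function names are hypothetical. Ranking the chord tones by how strongly the filter passes them picks out a contiguous group of four tones, i.e. an inversion, and that group shifts as the filter centre moves.

```python
import math

# Cmaj7 as pitch classes (C, E, G, B), the example chord from the text.
CMAJ7 = [0, 4, 7, 11]

def midi_to_hz(note: float) -> float:
    """Equal-tempered conversion with A4 (MIDI 69) = 440 Hz."""
    return 440.0 * 2.0 ** ((note - 69) / 12)

def chord_tones(pitch_classes, low_octave=2, high_octave=6):
    """Every chord tone across a range of octaves (MIDI 60 = C4).
    Assumption: a simplified model of where a chord-tuned resonator can ring."""
    return sorted(12 * (octave + 1) + pc
                  for octave in range(low_octave, high_octave + 1)
                  for pc in pitch_classes)

def bandpass_gain(freq, centre, q=1.5):
    """Magnitude of an idealised 2nd-order band-pass (hypothetical stand-in
    for the sampler's filter). Gain is 1 at the centre and falls off
    symmetrically in log-frequency."""
    ratio = freq / centre
    return 1.0 / math.sqrt(1.0 + q * q * (ratio - 1.0 / ratio) ** 2)

def revealed_voicing(centre_hz, voices=4):
    """The `voices` chord tones most strongly excited at this filter setting."""
    notes = chord_tones(CMAJ7)
    ranked = sorted(notes, key=lambda n: bandpass_gain(midi_to_hz(n), centre_hz),
                    reverse=True)
    return sorted(ranked[:voices])

print(revealed_voicing(200.0))  # [52, 55, 59, 60]  E3 G3 B3 C4, first inversion
print(revealed_voicing(300.0))  # [59, 60, 64, 67]  B3 C4 E4 G4, third inversion
```

Because the gain falls off monotonically with distance from the centre in log-frequency, the strongest tones are always neighbours in the chord, so sweeping the filter walks through inversions rather than producing arbitrary note sets.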

The technique of filtering the sample fed to a resonator was doubled on the sample sent to the Spectral Multiband Resonator (SMR), which produces a more limited range of tones, mainly sine waves. However, when the amplitude of each resonant band is modulated by LFOs (in this case rising saw waves), the SMR's harmonic drone becomes a more ephemeral, shifting melody. Modulating the filter on the SMR's input sample allowed higher or lower notes in the harmony to be revealed depending on body orientation. So that these two resonant devices share the spectral space, a single Wekinator model was trained to control the filters on both channels, with one filter's attenuverter inverted. As one filter opens, the other closes, so the two resonant chords never occupy the same spectral space but instead smoothly reveal new spectral areas as the body moves.
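The complementary filter arrangement can be sketched as a pure function. Assumptions: the Wekinator output spans 0..1, both filters respond exponentially across a hypothetical 80 Hz to 8 kHz range, and each filter's base cutoff sits at the middle of that range, so the attenuverter inverts the control around it. Under those assumptions the two cutoffs always share the same geometric mean, which is one way of stating that the two resonators stay out of each other's spectral space.

```python
def attenuvert(value: float, amount: float) -> float:
    """Attenuverter: scale a bipolar control by `amount` in [-1, 1];
    a negative amount inverts it."""
    return value * amount

def to_cutoff_hz(cv: float, low_hz: float = 80.0, high_hz: float = 8000.0) -> float:
    """Map a unipolar 0..1 control exponentially onto a cutoff range
    (the range is a hypothetical example)."""
    cv = min(max(cv, 0.0), 1.0)
    return low_hz * (high_hz / low_hz) ** cv

def complementary_cutoffs(model_output: float):
    """One model output (0..1) drives both filters. The SMR channel's copy
    passes through an inverted attenuverter, so one opens as the other closes."""
    bipolar = 2.0 * model_output - 1.0                    # 0..1 -> -1..+1
    resonator_cv = 0.5 + 0.5 * attenuvert(bipolar, +1.0)  # base cutoff at mid-range
    smr_cv = 0.5 + 0.5 * attenuvert(bipolar, -1.0)        # inverted around mid-range
    return to_cutoff_hz(resonator_cv), to_cutoff_hz(smr_cv)

print(complementary_cutoffs(1.0))  # (8000.0, 80.0): Resonator filter open, SMR filter closed
```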

These two examples from the patch point to another significant consideration: the delineation between controlled and automatic parameters. Deciding which parameters should be controlled by models (i.e. by the movement interaction) and which should continue independently, through either cyclical or random modulation, determines how the system maintains musical structure and how it informs the interactive performer. As described above, I attached the chord changes and the SMR band envelopes to cyclical, clocked LFO modulation. This ensures that the musical structure proceeds regardless of body movement, allowing the performer to adorn the structure by following it rather than determining it. Judging by both my own use and Lauren's, this seems to be an effective way of creating a cohesive interactive performance.
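The split between controlled and automatic parameters amounts to a routing decision per parameter. The sketch below assumes a shared clock counted in pulses and a rising saw LFO that completes one cycle every 64 pulses for the chord changes, which is one reading of the '64/1' setting. The SMR period of 8 pulses and all parameter names are hypothetical, and how the Disting turns its Chord voltage into a particular chord is not modelled. However the model outputs change, the structural parameters depend only on the clock.

```python
def clocked_saw(clock_ticks: int, period_ticks: int) -> float:
    """Rising saw in [0, 1) completing one cycle every `period_ticks` pulses."""
    return (clock_ticks % period_ticks) / period_ticks

# Source for each parameter: ("lfo", period in pulses) or ("model", output index).
# Names and the SMR period are illustrative, not taken from the actual patch.
ROUTING = {
    "disting_chord": ("lfo", 64),        # structural: slow cycle of chord changes
    "smr_band_levels": ("lfo", 8),       # structural: saw envelope (one shared phase here)
    "resonator_position": ("model", 0),  # timbral: follows the performer
    "resonator_decay": ("model", 1),
    "resonator_brightness": ("model", 2),
}

def control_frame(clock_ticks, model_outputs):
    """Values for every routed parameter at one clock pulse."""
    frame = {}
    for name, (source, arg) in ROUTING.items():
        if source == "lfo":
            frame[name] = clocked_saw(clock_ticks, arg)
        else:
            frame[name] = model_outputs[arg]
    return frame

still = control_frame(32, [0.0, 0.0, 0.0])
moving = control_frame(32, [0.9, 0.4, 0.7])
print(still["disting_chord"], moving["disting_chord"])  # 0.5 0.5: structure ignores movement
```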

With this newly refined sonic palette, I began experimenting with live input into the system using an autoharp and a guitar. I had been very curious to try this: playing a physical instrument alongside the system opens interesting possibilities, because the movement interaction allows parameters to be modulated intentionally without letting go of the acoustic instrument. Simply put, a 'hands-free' accompaniment. Despite the limited space in my studio, I was able to perform a rudimentary improvisation in which my body movements orchestrated variations in tone and timbre while I improvised on the autoharp over them. Interacting with a physical 'prop' also helped contextualise my movements, both as an embodied experience and as a viewed spectacle. This highlights a significant potential use for the system, one that could prove a satisfying purpose in itself.

With so many new parameters introduced, I found it prudent to record a new set of examples in Wekinator. Unfortunately, Wekinator cannot 'run' and 'record' simultaneously, meaning that examples recorded for new models cannot be shaped by the live output of models trained on older examples. This is a challenge when the designer wishes to compose models that react cohesively; possible solutions include detailed prior planning or a more thoroughly documented workflow. In my case, the software is fast enough to start from scratch repeatedly, which if anything allows more opportunity for experimentation and reflection.
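Because recording and running cannot overlap, a 'start from scratch' pass is essentially an ordered sequence of commands. Wekinator can be driven over OSC, so the sketch below builds that sequence as raw OSC packets using only the standard library. It assumes Wekinator is listening on its default input port 6448 and uses the `/wek/inputs` address and `/wekinator/control/...` messages described in Wekinator's OSC control documentation; these addresses are worth checking against the installed version, and the pose-feature frames are placeholders. In practice the frames would be streamed in real time while a pose is held, not sent in one burst.

```python
import socket
import struct

WEKINATOR_ADDR = ("127.0.0.1", 6448)  # assumption: Wekinator's default OSC input port

def osc_string(text: str) -> bytes:
    """OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)

def osc_message(address: str, *args: float) -> bytes:
    """Encode an OSC message whose arguments are all big-endian float32."""
    type_tags = "," + "f" * len(args)
    payload = b"".join(struct.pack(">f", float(a)) for a in args)
    return osc_string(address) + osc_string(type_tags) + payload

def rebuild_session(pose_frames):
    """One 'start from scratch' pass. Running is stopped before recording and
    only restarted after training, since Wekinator cannot do both at once."""
    control = "/wekinator/control/"
    messages = [osc_message(control + "stopRunning"),
                osc_message(control + "deleteAllExamples"),
                osc_message(control + "startRecording")]
    # While recording, each input frame is stored with the current output values.
    messages += [osc_message("/wek/inputs", *frame) for frame in pose_frames]
    messages += [osc_message(control + "stopRecording"),
                 osc_message(control + "train"),
                 osc_message(control + "startRunning")]
    return messages

def send(messages, addr=WEKINATOR_ADDR):
    """Send the packets over UDP; OSC over UDP needs no connection."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        for message in messages:
            sock.sendto(message, addr)
```

Calling `send(rebuild_session(frames))` with real pose features would perform one full cycle; splitting the recording step out with pauses between frames would be closer to a live session.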

In this quick and intuitive working state, I was once again drawn to the 'randomise' function within the software. This function sets the models' output values at random, producing a random assortment of sonic parameters and therefore a random 'sound' in the modular. Though this process risks feeling like a compositional cheat, it undoubtedly helps in finding new sonic terrain that I may not have arrived at otherwise. By randomising the parameters, fine-tuning if needed, and then interpreting the 'sound' as a pose, a wider sonic/movement palette can be created much faster and more intuitively than by recording movement/sound sequences or pre-composed ideas. Following this experience, I believe the best use of the randomise function is to record examples at the extremes of the movement range, such as at the edges of the sensor range or with drastic poses, and then fill in manually composed sound/pose combinations in areas where predictability is key. Manually composed examples have included a nearly silent patch at a neutral pose in the centre of the room, and an extreme, high-filtered patch with significant reverb for a hands-above-head pose at the rear of the room. Crafting examples this way places predictable, neutral sound/pose combinations in easily replicable spots in the room, while more extreme sounds are reached by exploring the room and the body. The result is a user experience that is comfortably exploratory, with regions of 'safety' that can be returned to.
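The example-crafting strategy can be expressed as a small dataset plus an interpolator. This sketch is illustrative only: poses are hypothetical three-value feature vectors (left/right position, depth in the room, hand height, all 0..1), the three outputs stand for hypothetical level, filter and reverb controls, and inverse-distance weighting stands in for Wekinator's own regression models, which will not behave identically. It shows why hand-composed anchors create 'safe' regions: near an anchor, its output dominates whatever the randomised extremes contain.

```python
import math
import random

# Hand-composed anchors: (pose, outputs). Pose features: (x, depth, hand_height).
NEUTRAL_CENTRE = ((0.5, 0.5, 0.0), [0.02, 0.3, 0.1])  # nearly silent
REAR_HANDS_UP = ((0.5, 1.0, 1.0), [0.8, 1.0, 0.9])    # high filter, heavy reverb

# Extremes of the movement range: edges of the sensor area and drastic poses.
EXTREME_POSES = [(0.0, 0.5, 0.5), (1.0, 0.5, 0.5), (0.5, 0.0, 0.5),
                 (0.0, 1.0, 0.0), (1.0, 1.0, 0.0)]

def randomise_outputs(n_outputs, rng):
    """Like the 'randomise' button: each output drawn uniformly from [0, 1)."""
    return [rng.random() for _ in range(n_outputs)]

def build_examples(seed=0):
    """Random sounds at the extremes, hand-composed sounds at the anchors."""
    rng = random.Random(seed)
    examples = [(pose, randomise_outputs(3, rng)) for pose in EXTREME_POSES]
    examples += [NEUTRAL_CENTRE, REAR_HANDS_UP]
    return examples

def predict(examples, pose, power=2.0):
    """Inverse-distance-weighted blend of example outputs (a stand-in model)."""
    totals = [0.0] * len(examples[0][1])
    weight_sum = 0.0
    for ex_pose, outputs in examples:
        d = math.dist(ex_pose, pose)
        if d == 0.0:
            return list(outputs)  # exactly on an example: reproduce it
        w = 1.0 / d ** power
        weight_sum += w
        totals = [t + w * o for t, o in zip(totals, outputs)]
    return [t / weight_sum for t in totals]

examples = build_examples()
print(predict(examples, (0.52, 0.5, 0.0)))  # level stays close to the near-silent anchor
```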

In the visual/audiovisual realm, I revisited my first movement-interactive patch. Particle systems are a staple of real-time visual design, almost trope-like in their prevalence, though I would attribute this to their efficacy and aesthetic appeal before anything else. As they form such a basis for interactive visual design, I think they are a very relevant application through which to explore movement interaction for performance. I started fresh by rebuilding my particle system with the latest ParticlesGPU TouchDesigner component from the Palette, which features updated physics and other optimisations that allow larger systems and finer control. Through experimentation, I identified the parameters that gave the most control over the structure, speed and directional flow of the particles. With a limited number of Wekinator models, it is better to modulate a small set of significant parameters than to provide control over all of them.

Models in Wekinator (left) and the Turbulence parameters they control in TouchDesigner (right).

These turned out to be the X, Y and Z directional Turbulence parameters, along with Turbulence Magnitude and Turbulence Period. By training Wekinator models dedicated to these parameters, I was able to link combinations of sonic control parameters to different kinds of Turbulence, creating an audiovisual 'instrument' in which semantic congruence between the sonic and visual material comes from a common modulation source. In this case, however, the visual material's reactions to the body were not entirely intuitive. Visual material lends itself to more meaningful or familiar interactions, such as 'waving left = particles move left, waving up = particles ascend, stillness = particles halt', so the more arbitrary mapping of Turbulence parameters to body poses through Wekinator produced less natural interactions. Reflecting on this, I think particle system control may be better suited to 1:1 mapping straight from body points than to the latent values produced by Wekinator. In theory, this would provide a more intuitive interactive experience with the visuals. However, would it then reduce the audiovisual cohesiveness? Or would it offer more guidance in linking sonic results to poses? This looks like the next avenue of inquiry.

A studio recording of audiovisual interactivity through Wekinator. Models trained on common data and assigned to individual sonic and visual parameters make for a semantically congruent performance of both mediums.
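The 1:1 alternative raised above could be prototyped by mapping a single tracked body point straight onto the turbulence controls. This sketch assumes a tracker that reports a wrist position in metres each frame, with x pointing to the performer's right and y pointing up. The wrist's direction of movement sets the X/Y/Z turbulence and its speed sets the magnitude; the control names, gain and stillness threshold are hypothetical and are not ParticlesGPU parameter names. Keeping direction and magnitude separate mirrors the split between the directional Turbulence parameters and Turbulence Magnitude.

```python
import math

def direct_turbulence(prev_wrist, wrist, dt, gain=2.0, still_threshold=0.05):
    """Map one tracked point's velocity directly onto turbulence controls.

    prev_wrist, wrist: (x, y, z) positions in metres on consecutive frames.
    dt: seconds between the frames.
    Returned keys are hypothetical names, not ParticlesGPU parameters.
    """
    velocity = [(b - a) / dt for a, b in zip(prev_wrist, wrist)]
    speed = math.sqrt(sum(v * v for v in velocity))
    if speed < still_threshold:
        # Stillness: no turbulence (whether particles fully halt also depends
        # on the particle system's drag settings).
        return {"turb_x": 0.0, "turb_y": 0.0, "turb_z": 0.0, "turb_magnitude": 0.0}
    direction = [v / speed for v in velocity]  # unit vector of the gesture
    return {
        "turb_x": direction[0],  # waving left gives a negative x push
        "turb_y": direction[1],  # raising the hand gives an upward push
        "turb_z": direction[2],
        "turb_magnitude": min(gain * speed, 1.0),  # faster gesture, stronger flow
    }

print(direct_turbulence((0.0, 0.0, 0.0), (-0.1, 0.0, 0.0), 0.1))  # wave left at 1 m/s
```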